new TRTC()

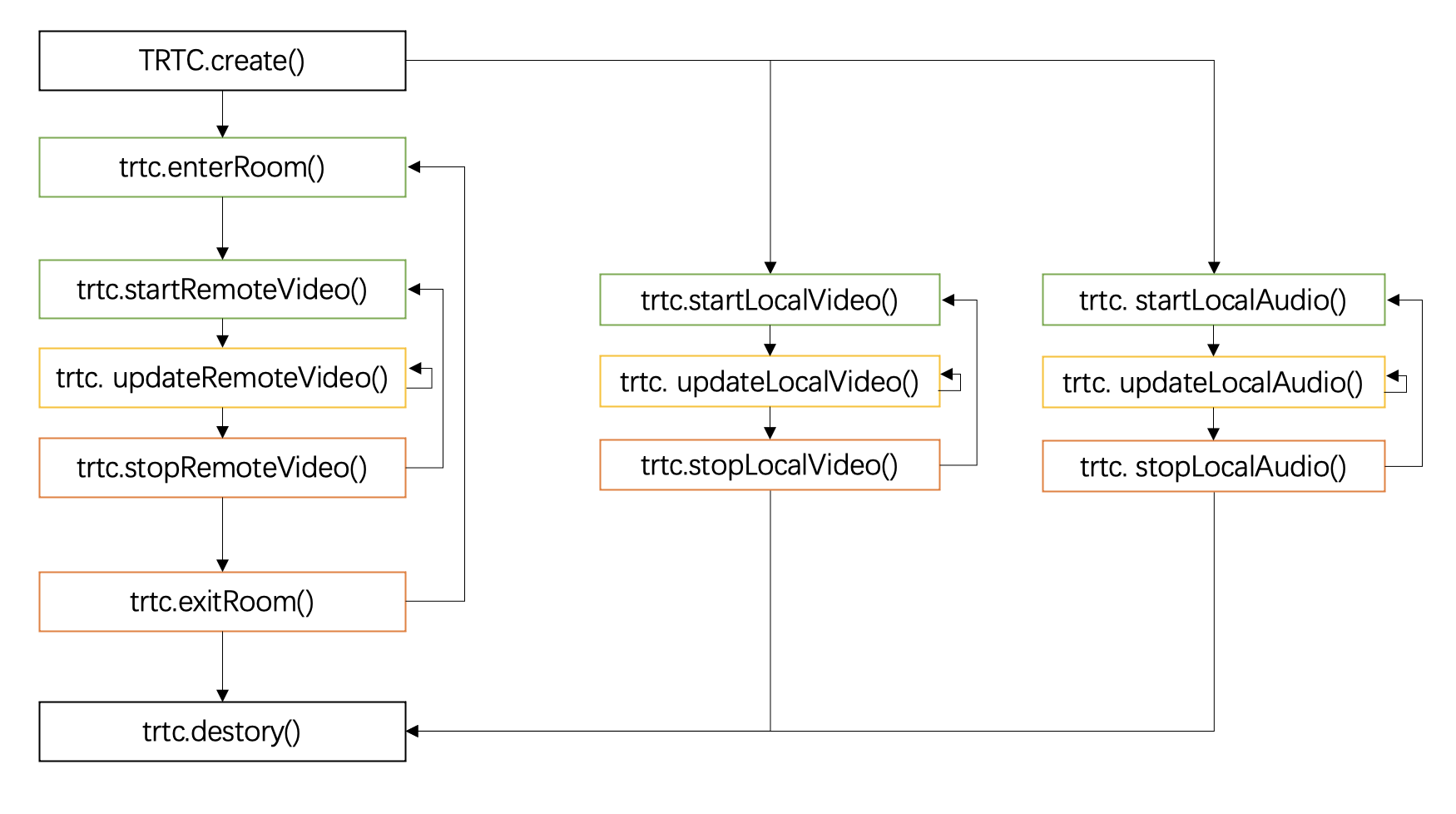

The TRTC object is created using TRTC.create() and provides core real-time audio and video capabilities:

- Enter an audio and video room: enterRoom()

- Exit the current audio and video room: exitRoom()

- Preview/publish local video: startLocalVideo()

- Capture/publish local audio: startLocalAudio()

- Stop previewing/publishing local video: stopLocalVideo()

- Stop capturing/publishing local audio: stopLocalAudio()

- Watch remote video: startRemoteVideo()

- Stop watching remote video: stopRemoteVideo()

- Mute/unmute remote audio: muteRemoteAudio()

The TRTC lifecycle is shown in the following figure:

Methods

(static) create() → {TRTC}

Create a TRTC object for implementing functions such as entering a room, previewing, publishing, and subscribing streams.

Note:

- You must create a TRTC object first and call its methods and listen to its events to implement various functions required by the business.

Example

// Create a TRTC object

const trtc = TRTC.create();

Parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| options.plugins | Array | | List of registered plugins (optional). |
| options.enableSEI | boolean | false | Whether to enable SEI sending and receiving (optional). |
| options.assetsPath | string | | Address of the static resource files that the plugins depend on (optional). |
| options.userDefineRecordId | string | | Sets the userDefineRecordId for cloud recording (optional). |

Returns:

TRTC object

- Type

- TRTC

(async) enterRoom(options)

Enter a video call room.

- Entering a room means starting a video call session. Only after entering the room successfully can you make audio and video calls with other users in the room.

- You can publish local audio and video streams through startLocalVideo() and startLocalAudio() respectively. After successful publishing, other users in the room will receive the REMOTE_AUDIO_AVAILABLE and REMOTE_VIDEO_AVAILABLE event notifications.

- By default, the SDK automatically plays remote audio. You need to call startRemoteVideo() to play remote video.
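The flow described above can be sketched roughly as follows. This is a minimal sketch, not the SDK's prescribed pattern: `joinAndWatch` is a hypothetical helper name, `TRTC` and `trtc` are passed in so the snippet does not assume how the SDK is loaded, and it assumes a container element whose id is `${userId}_${streamType}` already exists in the DOM.

```javascript
// Hypothetical helper: enter a room and play every remote video that appears.
// `TRTC` is the SDK namespace and `trtc` an instance from TRTC.create().
async function joinAndWatch(TRTC, trtc, roomParams) {
  // Register the listener before entering so no availability event is missed.
  trtc.on(TRTC.EVENT.REMOTE_VIDEO_AVAILABLE, async ({ userId, streamType }) => {
    try {
      // Assumption: an element with this id was placed in the DOM in advance.
      await trtc.startRemoteVideo({ userId, streamType, view: `${userId}_${streamType}` });
    } catch (error) {
      console.warn(`failed to play video of ${userId}`, error);
    }
  });
  // Remote audio is played automatically by default; only video needs the call above.
  await trtc.enterRoom(roomParams);
}
```

A call might look like `await joinAndWatch(TRTC, trtc, { roomId: 8888, sdkAppId, userId, userSig });`.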

Example

const trtc = TRTC.create();

await trtc.enterRoom({ roomId: 8888, sdkAppId, userId, userSig });

Parameters:

| Name | Type | Description |
|---|---|---|
| options | object | Required. Enter room parameters. |

Throws:

(async) exitRoom()

Exit the current audio and video call room.

- After exiting the room, the connection with remote users will be closed, and remote audio and video will no longer be received and played, and the publishing of local audio and video will be stopped.

- The capture and preview of the local camera and microphone will not stop. You can call stopLocalVideo() and stopLocalAudio() to stop capturing local microphone and camera.

Example

await trtc.exitRoom();

Throws:

(async) switchRoom(options)

Switch to another video call room.

- Switches the room that an audience member is watching in a live scenario. This is faster than exiting the old room and then entering the new one, and it can reduce the startup time in live streaming and similar scenarios.

- Contact us to enable this API.

Example

const trtc = TRTC.create();

await trtc.enterRoom({

...

roomId: 8888,

scene: TRTC.TYPE.SCENE_LIVE,

role: TRTC.TYPE.ROLE_AUDIENCE

});

await trtc.switchRoom({

userSig,

roomId: 9999

});

Parameters:

| Name | Type | Description |
|---|---|---|
| options | object | Required. Switch room parameters. |

Throws:

(async) switchRole(role, [option])

Switches the user role, only effective in TRTC.TYPE.SCENE_LIVE interactive live streaming mode.

In interactive live streaming mode, a user may need to switch between "audience" and "anchor". You can determine the role through the role field in enterRoom(), or switch roles after entering the room through switchRole.

- To switch from audience to anchor, call trtc.switchRole(TRTC.TYPE.ROLE_ANCHOR) to change the user role to TRTC.TYPE.ROLE_ANCHOR, and then call startLocalVideo() and startLocalAudio() to publish local audio and video as needed.

- To switch from anchor to audience, call trtc.switchRole(TRTC.TYPE.ROLE_AUDIENCE) to change the user role to TRTC.TYPE.ROLE_AUDIENCE. If local audio and video are already published, the SDK stops publishing them.

Notice:

- This interface can only be called after entering the room successfully.

- After closing the camera and microphone, switch to the audience role promptly so that the idle anchor role does not keep occupying one of the 50 upstream slots.
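The two role transitions described above can be sketched as a pair of helpers. `goOnStage` and `leaveStage` are hypothetical names, and the sketch assumes the camera and microphone have not been started yet when going on stage (startLocalVideo() and startLocalAudio() cannot be called repeatedly).

```javascript
// Hypothetical helpers for TRTC.TYPE.SCENE_LIVE; `TRTC` is the SDK namespace
// and `trtc` an instance that has already entered a room.
async function goOnStage(TRTC, trtc, view) {
  // Switch to the anchor role first, then publish as needed.
  await trtc.switchRole(TRTC.TYPE.ROLE_ANCHOR);
  await trtc.startLocalVideo({ view });
  await trtc.startLocalAudio();
}

async function leaveStage(TRTC, trtc) {
  // Close the camera and microphone, then return to the audience role
  // so the anchor role is not held while nothing is being published.
  await trtc.stopLocalVideo();
  await trtc.stopLocalAudio();
  await trtc.switchRole(TRTC.TYPE.ROLE_AUDIENCE);
}
```

Keeping the role switch and the capture calls in one helper makes it harder to forget the switch back to the audience role.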

Examples

// After entering the room successfully

// TRTC.TYPE.SCENE_LIVE interactive live streaming mode, audience switches to anchor

await trtc.switchRole(TRTC.TYPE.ROLE_ANCHOR);

// Switch from audience role to anchor role and start streaming

await trtc.startLocalVideo();

// TRTC.TYPE.SCENE_LIVE interactive live streaming mode, anchor switches to audience

await trtc.switchRole(TRTC.TYPE.ROLE_AUDIENCE);

// Since v5.3.0+

await trtc.switchRole(TRTC.TYPE.ROLE_ANCHOR, { privateMapKey: 'your new privateMapKey' });

Parameters:

| Name | Type | Description |
|---|---|---|
| role | string | Required. User role. |
| option | object | Optional. |

Throws:

destroy()

Destroy the TRTC instance

After exiting the room, if your application no longer needs the trtc instance, call this interface promptly to destroy it and release the related resources.

Note:

- A destroyed trtc instance cannot be used again.

- If you have entered a room, call exitRoom() and wait for it to succeed before calling this interface to destroy trtc.

Example

// When the call is over

await trtc.exitRoom();

// If the trtc is no longer needed, destroy the trtc and release the reference.

trtc.destroy();

trtc = null;

Throws:

(async) startLocalAudio([config])

Start collecting audio from the local microphone and publish it to the current room.

- When to call: can be called before or after entering the room, cannot be called repeatedly.

- Only one microphone can be opened for a trtc instance. If you need to open another microphone for testing in the case of already opening one microphone, you can create multiple trtc instances to achieve it.

Examples

// Collect the default microphone and publish

await trtc.startLocalAudio();

// The following example tests the microphone and can be used for microphone volume detection.

trtc.enableAudioVolumeEvaluation();

trtc.on(TRTC.EVENT.AUDIO_VOLUME, event => { });

// No need to publish audio for testing microphone

await trtc.startLocalAudio({ publish: false });

// After the test is completed, turn off the microphone

await trtc.stopLocalAudio();

Parameters:

| Name | Type | Description |
|---|---|---|
| config | object | Optional. Configuration item. |

Throws:

(async) updateLocalAudio([config])

Update the configuration of the local microphone.

- When to call: This interface needs to be called after startLocalAudio() is successful and can be called multiple times.

- This method uses incremental update: only update the passed parameters, and keep the parameters that are not passed unchanged.
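One way to picture the incremental update described above is as a merge of the new parameters into the current configuration. The sketch below is a conceptual model only, not the SDK's actual code: `applyIncrementalUpdate` is a hypothetical function, and merging the nested `option` object key by key is an assumption.

```javascript
// Conceptual model of an incremental update (not the SDK's implementation):
// keys present in `patch` overwrite, everything else is kept unchanged.
function applyIncrementalUpdate(current, patch) {
  const next = { ...current, ...patch };
  // Assumption: the nested `option` object is merged key by key as well.
  if (current.option || patch.option) {
    next.option = { ...current.option, ...patch.option };
  }
  return next;
}

const before = { publish: true, mute: false, option: { microphoneId: 'mic-a' } };
// Only `mute` is passed, so `publish` and `option` keep their previous values.
const after = applyIncrementalUpdate(before, { mute: true });
console.log(after);
```

In this model, passing only `{ mute: true }` leaves `publish` and `option.microphoneId` as they were, which mirrors the behavior the bullet describes.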

Example

// Switch microphone

const microphoneList = await TRTC.getMicrophoneList();

if (microphoneList[1]) {

await trtc.updateLocalAudio({ option: { microphoneId: microphoneList[1].deviceId }});

}

Parameters:

| Name | Type | Description |
|---|---|---|
| config | object | Optional. |

Throws:

(async) stopLocalAudio()

Stop collecting and publishing the local microphone.

- If you just want to mute the microphone, please use updateLocalAudio({ mute: true }). Refer to: Turn On/Off Camera/Mic.

Example

await trtc.stopLocalAudio();

Throws:

(async) startLocalVideo([config])

Start collecting video from the local camera, play the camera's video on the specified HTMLElement tag, and publish the camera's video to the current room.

- When to call: can be called before or after entering the room, but cannot be called repeatedly.

- Only one camera can be started per TRTC instance. If you need to start another camera for testing while one camera is already started, you can create multiple TRTC instances to achieve this.

Examples

// Preview and publish the camera

await trtc.startLocalVideo({

view: document.getElementById('localVideo'), // Preview the video on the element with the DOM elementId of localVideo.

});

// Preview the camera without publishing. Can be used for camera testing.

const config = {

view: document.getElementById('localVideo'), // Preview the video on the element with the DOM elementId of localVideo.

publish: false // Do not publish the camera

}

await trtc.startLocalVideo(config);

// Call updateLocalVideo when you need to publish the video

await trtc.updateLocalVideo({ publish: true });

// Use a specified camera.

const cameraList = await TRTC.getCameraList();

if (cameraList[0]) {

await trtc.startLocalVideo({

view: document.getElementById('localVideo'), // Preview the video on the element with the DOM elementId of localVideo.

option: {

cameraId: cameraList[0].deviceId,

}

});

}

// Use the front camera on a mobile device.

await trtc.startLocalVideo({ view, option: { useFrontCamera: true }});

// Use the rear camera on a mobile device.

await trtc.startLocalVideo({ view, option: { useFrontCamera: false }});

Parameters:

| Name | Type | Description |
|---|---|---|
| config | object | Optional. |

Throws:

(async) updateLocalVideo([config])

Update the local camera configuration.

- This interface needs to be called after startLocalVideo() is successful.

- This interface can be called multiple times.

- This method uses incremental update: only updates the passed-in parameters, and keeps the parameters that are not passed in unchanged.

Examples

// Switch camera

const cameraList = await TRTC.getCameraList();

if (cameraList[1]) {

await trtc.updateLocalVideo({ option: { cameraId: cameraList[1].deviceId }});

}

// Stop publishing video, but keep local preview

await trtc.updateLocalVideo({ publish:false });

Parameters:

| Name | Type | Description |
|---|---|---|
| config | object | Optional. |

Throws:

(async) stopLocalVideo()

Stop capturing, previewing, and publishing the local camera.

- If you only want to stop publishing video but keep the local camera preview, use updateLocalVideo({ publish: false }) instead.

Example

await trtc.stopLocalVideo();

Throws:

(async) startScreenShare([config])

Start screen sharing.

- After starting screen sharing, other users in the room will receive the REMOTE_VIDEO_AVAILABLE event, with streamType as STREAM_TYPE_SUB, and other users can play screen sharing through startRemoteVideo.

Example

// Start screen sharing

await trtc.startScreenShare();

Parameters:

| Name | Type | Description |
|---|---|---|
| config | object | Optional. |

Throws:

(async) updateScreenShare([config])

Update screen sharing configuration

- This interface needs to be called after startScreenShare() is successful.

- This interface can be called multiple times.

- This method uses incremental update: only update the passed-in parameters, and keep the parameters that are not passed-in unchanged.

Example

// Stop screen sharing, but keep the local preview of screen sharing

await trtc.updateScreenShare({ publish:false });

Parameters:

| Name | Type | Description |
|---|---|---|
| config | object | Optional. |

Throws:

(async) stopScreenShare()

Stop screen sharing.

Example

await trtc.stopScreenShare();

Throws:

(async) startRemoteVideo([config])

Play remote video

- When to call: Call after receiving the TRTC.on(TRTC.EVENT.REMOTE_VIDEO_AVAILABLE) event.

Example

trtc.on(TRTC.EVENT.REMOTE_VIDEO_AVAILABLE, ({ userId, streamType }) => {

// You need to place the video container in the DOM in advance, and it is recommended to use `${userId}_${streamType}` as the element id.

trtc.startRemoteVideo({ userId, streamType, view: `${userId}_${streamType}` });

})

Parameters:

| Name | Type | Description |
|---|---|---|
| config | object | Optional. |

Throws:

(async) updateRemoteVideo([config])

Update remote video playback configuration

- This method should be called after startRemoteVideo is successful.

- This method can be called multiple times.

- This method uses incremental updates, so only the configuration items that need to be updated need to be passed in.

Example

const config = {

view: document.getElementById(userId), // you can use a new view to update the position of video.

userId,

streamType: TRTC.TYPE.STREAM_TYPE_MAIN

}

await trtc.updateRemoteVideo(config);

Parameters:

| Name | Type | Description |
|---|---|---|
| config | object | Optional. |

Throws:

(async) stopRemoteVideo(config)

Used to stop remote video playback.

Example

// Stop playing all remote users

await trtc.stopRemoteVideo({ userId: '*' });

Parameters:

| Name | Type | Description |
|---|---|---|
| config | object | Required. Remote video configuration. |

Throws:

(async) muteRemoteAudio(userId, mute)

Mute a remote user and stop subscribing audio data from that user. Only effective for the current user, other users in the room can still hear the muted user's voice.

Note:

- By default, after entering the room, the SDK will automatically play remote audio. You can call this interface to mute or unmute remote users.

- If autoReceiveAudio = false is passed when entering the room, remote audio is not played automatically. To play it, call this method with mute set to false.

- This interface takes effect whether it is called before or after entering the room (enterRoom). The mute state is reset to false after exiting the room (exitRoom).

- If you want to keep subscribing to the user's audio data but not play it, call setRemoteAudioVolume(userId, 0).
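As a sketch of the autoReceiveAudio = false case above, the helper below plays audio only for users on an allow-list. `playAudioOnlyFor` is a hypothetical name, and the sketch assumes the REMOTE_AUDIO_AVAILABLE event payload carries a `userId` field in the same way the REMOTE_VIDEO_AVAILABLE payload does.

```javascript
// Hypothetical helper for rooms entered with autoReceiveAudio = false:
// remote audio is played only for the listed users.
function playAudioOnlyFor(TRTC, trtc, allowedUserIds) {
  const allowed = new Set(allowedUserIds);
  // Assumption: the event payload contains `userId`.
  trtc.on(TRTC.EVENT.REMOTE_AUDIO_AVAILABLE, async ({ userId }) => {
    if (!allowed.has(userId)) return; // stays unsubscribed
    try {
      await trtc.muteRemoteAudio(userId, false); // mute = false starts playback
    } catch (error) {
      console.warn(`failed to unmute ${userId}`, error);
    }
  });
}
```

Call it before enterRoom() so that the listener is in place when the first remote user publishes audio.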

Example

// Mute all remote users

await trtc.muteRemoteAudio('*', true);

Parameters:

| Name | Type | Description |
|---|---|---|
| userId | string | Required. Remote user ID; '*' represents all users. |
| mute | boolean | Required. Whether to mute. |

Throws:

setRemoteAudioVolume(userId, volume)

Used to control the playback volume of remote audio.

- Starting from version v5.12.1, this setting only needs to be configured once, and there is no need to reset it when remote users rejoin the room. For versions prior to v5.12.1, if a remote user rejoins the room, the volume setting needs to be reconfigured.

- Not supported by iOS Safari

Example

await trtc.setRemoteAudioVolume('123', 90);

Parameters:

| Name | Type | Description |
|---|---|---|
| userId | string | Required. Remote user ID; '*' represents all remote users. |
| volume | number | Required. Volume, ranging from 0 to 100. The default value is 100. |

(async) startPlugin(plugin, options) → {Promise.<void>}

Start plugin

| pluginName | name | tutorial | param |
|---|---|---|---|
| 'AudioMixer' | Audio Mixer Plugin | Music and Audio Effects | AudioMixerOptions |
| 'AIDenoiser' | AI Denoiser Plugin | Implement AI noise reduction | AIDenoiserOptions |
| 'VirtualBackground' | Virtual Background Plugin | Enable Virtual Background | VirtualBackgroundOptions |
| 'Watermark' | Watermark Plugin | Enable Watermark Plugin | WatermarkOptions |
| 'SmallStreamAutoSwitcher' | Small Stream Auto Switcher Plugin | Enable Small Stream Auto Switcher Plugin | SmallStreamAutoSwitcherOptions |
| 'VoiceChanger' | Voice Changer Plugin | Enable Voice Changer | VoiceChangerStartOptions |

Parameters:

| Name | Type | Description |
|---|---|---|
| plugin | PluginName | Required. |
| options | AudioMixerOptions \| AIDenoiserOptions \| VirtualBackgroundOptions \| WatermarkOptions \| SmallStreamAutoSwitcherOptions \| VoiceChangerStartOptions | Required. |

Returns:

- Type

- Promise.<void>

(async) updatePlugin(plugin, options) → {Promise.<void>}

Update plugin

| pluginName | name | tutorial | param |
|---|---|---|---|
| 'AudioMixer' | Audio Mixer Plugin | Music and Audio Effects | UpdateAudioMixerOptions |
| 'AIDenoiser' | AI Denoiser Plugin | Implement AI noise reduction | AIDenoiserOptions |
| 'VirtualBackground' | Virtual Background Plugin | Enable Virtual Background | VirtualBackgroundOptions |
| 'Watermark' | Watermark Plugin | Enable Watermark Plugin | WatermarkOptions |
| 'SmallStreamAutoSwitcher' | Small Stream Auto Switcher Plugin | Enable Small Stream Auto Switcher Plugin | SmallStreamAutoSwitcherOptions |
| 'VoiceChanger' | Voice Changer Plugin | Enable Voice Changer | VoiceChangerUpdateOptions |

Parameters:

| Name | Type | Description |
|---|---|---|
| plugin | PluginName | Required. |
| options | UpdateAudioMixerOptions \| AIDenoiserOptions \| VirtualBackgroundOptions \| WatermarkOptions \| SmallStreamAutoSwitcherOptions \| VoiceChangerUpdateOptions | Required. |

Returns:

- Type

- Promise.<void>

(async) stopPlugin(plugin, options) → {Promise.<void>}

Stop plugin

| pluginName | name | tutorial | param |
|---|---|---|---|
| 'AudioMixer' | Audio Mixer Plugin | Music and Audio Effects | StopAudioMixerOptions |
| 'AIDenoiser' | AI Denoiser Plugin | Implement AI noise reduction | |
| 'VirtualBackground' | Virtual Background Plugin | Enable Virtual Background | |
| 'Watermark' | Watermark Plugin | Enable Watermark Plugin | |
| 'SmallStreamAutoSwitcher' | Small Stream Auto Switcher Plugin | Enable Small Stream Auto Switcher Plugin | |
| 'VoiceChanger' | Voice Changer Plugin | Enable Voice Changer | |

Parameters:

| Name | Type | Description |
|---|---|---|
| plugin | PluginName | Required. |
| options | StopAudioMixerOptions | Required. |

Returns:

- Type

- Promise.<void>

enableAudioVolumeEvaluation([interval], [enableInBackground])

Enables or disables the volume callback.

- After this function is enabled, the SDK regularly emits the TRTC.EVENT.AUDIO_VOLUME event, whether or not anyone in the room is speaking. The event reports the evaluated volume of each user.

Example

trtc.on(TRTC.EVENT.AUDIO_VOLUME, event => {

event.result.forEach(({ userId, volume }) => {

const isMe = userId === ''; // When userId is an empty string, it represents the local microphone volume.

if (isMe) {

console.log(`my volume: ${volume}`);

} else {

console.log(`user: ${userId} volume: ${volume}`);

}

})

});

// Enable volume callback and trigger the event every 1000ms

trtc.enableAudioVolumeEvaluation(1000);

// To turn off the volume callback, pass in an interval value less than or equal to 0

trtc.enableAudioVolumeEvaluation(-1);

Parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| interval | number | 2000 | Time interval, in ms, for triggering the volume callback event. The default is 2000 ms and the minimum is 100 ms. A value less than or equal to 0 turns the volume callback off. |
| enableInBackground | boolean | false | For performance reasons, the SDK does not emit volume callback events when the page is in the background. Set this parameter to true to keep receiving them while the page is in the background. |
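Building on the `event.result` shape used in the example above (an array of `{ userId, volume }` entries), a small helper can pick the current loudest speaker, for instance to highlight a video tile. `loudestSpeaker` is a hypothetical name and the default threshold of 10 is an arbitrary assumption, not an SDK value.

```javascript
// Hypothetical helper: pick the loudest entry from an AUDIO_VOLUME event.
// `result` is the array of { userId, volume } objects from event.result.
function loudestSpeaker(result, threshold = 10) {
  let loudest = null;
  for (const entry of result) {
    if (entry.volume < threshold) continue; // ignore near-silence
    if (loudest === null || entry.volume > loudest.volume) {
      loudest = entry;
    }
  }
  return loudest; // null when nobody reaches the threshold
}
```

Inside the AUDIO_VOLUME handler this could be used as `const speaker = loudestSpeaker(event.result);`, keeping in mind that an empty `userId` represents the local microphone.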

on(eventName, handler, context)

Listen to TRTC events

For a detailed list of events, please refer to: TRTC.EVENT

Example

trtc.on(TRTC.EVENT.REMOTE_VIDEO_AVAILABLE, event => {

// REMOTE_VIDEO_AVAILABLE event handler

});

Parameters:

| Name | Type | Description |
|---|---|---|
| eventName | string | Required. Event name. |
| handler | function | Required. Event callback function. |
| context | context | Required. Context. |

off(eventName, handler, context)

Remove event listener

Example

trtc.on(TRTC.EVENT.REMOTE_USER_ENTER, function peerJoinHandler(event) {

// REMOTE_USER_ENTER event handler

console.log('remote user enter');

trtc.off(TRTC.EVENT.REMOTE_USER_ENTER, peerJoinHandler);

});

// Remove all event listeners

trtc.off('*');

Parameters:

| Name | Type | Description |
|---|---|---|
| eventName | string | Required. Event name. Passing in the wildcard '*' removes all event listeners. |
| handler | function | Required. Event callback function. |
| context | context | Required. Context. |

getAudioTrack([config]) → (nullable) {MediaStreamTrack}

Get audio track

Example

// Versions before v5.4.3

trtc.getAudioTrack(); // Get the local microphone audioTrack, captured by trtc.startLocalAudio()

trtc.getAudioTrack('remoteUserId'); // Get a remote audioTrack

// Since v5.4.3, you can get the local screen audioTrack by passing streamType = TRTC.TYPE.STREAM_TYPE_SUB

trtc.getAudioTrack({ streamType: TRTC.TYPE.STREAM_TYPE_SUB });

// Since v5.8.2, you can get the processed audioTrack by passing processed = true

trtc.getAudioTrack({ processed: true });

Parameters:

| Name | Type | Description |
|---|---|---|
| config | Object \| string | Optional. If not passed, the local microphone audioTrack is returned. |

Returns:

Audio track

- Type

- MediaStreamTrack

getVideoTrack([config]) → {MediaStreamTrack|null}

Get video track

Example

// Get local camera videoTrack

const videoTrack = trtc.getVideoTrack();

// Get local screen sharing videoTrack

const screenVideoTrack = trtc.getVideoTrack({ streamType: TRTC.TYPE.STREAM_TYPE_SUB });

// Get remote user's main stream videoTrack

const remoteMainVideoTrack = trtc.getVideoTrack({ userId: 'test', streamType: TRTC.TYPE.STREAM_TYPE_MAIN });

// Get remote user's sub stream videoTrack

const remoteSubVideoTrack = trtc.getVideoTrack({ userId: 'test', streamType: TRTC.TYPE.STREAM_TYPE_SUB });

// Since v5.8.2+, you can get the processed videoTrack by passing processed = true

const processedVideoTrack = trtc.getVideoTrack({ processed: true });

Parameters:

| Name | Type | Description |
|---|---|---|
| config | string | Optional. If not passed, the local camera videoTrack is returned. |

Returns:

Video track

- Type

- MediaStreamTrack | null

getVideoSnapshot()

Get video snapshot

Notice: the video must be playing before a snapshot can be obtained. If there is no playback, an empty string is returned.

Since: v5.4.0

Example

// get self main stream video frame

trtc.getVideoSnapshot()

// get self sub stream video frame

trtc.getVideoSnapshot({streamType:TRTC.TYPE.STREAM_TYPE_SUB})

// get remote user main stream video frame

trtc.getVideoSnapshot({userId: 'remote userId', streamType:TRTC.TYPE.STREAM_TYPE_MAIN})

Parameters:

| Name | Type | Description |
|---|---|---|
| config.userId | string | Required. Remote user ID. |
| config.streamType | TRTC.TYPE.STREAM_TYPE_MAIN \| TRTC.TYPE.STREAM_TYPE_SUB | Required. |

sendSEIMessage(buffer, [options])

Send SEI Message

A video frame's header contains a block called SEI. This interface embeds your custom data in the SEI so that it is sent along with the video frame. SEI messages can accompany video frames all the way to the live CDN.

Applicable scenarios: lyric synchronization, live quizzes, etc.

When to call: after trtc.startLocalVideo() succeeds, or after trtc.startScreenShare() succeeds when the 'toSubStream' option is set to true.

Note:

- At most 1 KB can be sent in a single call, with at most 30 calls per second and at most 8 KB per second.

- Supported browsers: Chrome 86+, Edge 86+, Opera 72+, Safari 15.4+, Firefox 117+. Safari and Firefox are supported since v5.8.0.

- Because SEI is sent along with video frames, a lost video frame means its SEI is lost as well. You can send the same message several times within the frequency limit to improve delivery, in which case your application needs to de-duplicate messages on the receiving side.

- SEI cannot be sent without trtc.startLocalVideo() (or trtc.startScreenShare() when the 'toSubStream' option is set to true); SEI cannot be received without startRemoteVideo().

- SEI can only be sent with the H264 encoder.

Since: v5.3.0
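The notes above suggest re-sending a message within the rate limits and de-duplicating on the receiver. One possible sketch is to prefix each payload with a sequence number. Everything here is an assumption for illustration: `encodeSei`, `createSeiReceiver`, the 4-byte sequence prefix, reading the 1 KB limit conservatively as 1000 bytes, and the received data being an ArrayBuffer are not part of the SDK's documented behavior.

```javascript
const MAX_SEI_BYTES = 1000; // assumption: conservative reading of "1 KB per call"

// Build an ArrayBuffer: 4-byte big-endian sequence number + UTF-8 text.
function encodeSei(seq, text) {
  const body = new TextEncoder().encode(text);
  if (4 + body.byteLength > MAX_SEI_BYTES) {
    throw new RangeError('SEI payload exceeds the per-call size limit');
  }
  const bytes = new Uint8Array(4 + body.byteLength);
  new DataView(bytes.buffer).setUint32(0, seq);
  bytes.set(body, 4);
  return bytes.buffer; // suitable as the `buffer` argument of sendSEIMessage
}

// Returns a receive(userId, buffer) function that yields null for repeats.
function createSeiReceiver() {
  const seen = new Set(); // grows without bound; prune it in long sessions
  return function receive(userId, buffer) {
    const seq = new DataView(buffer).getUint32(0);
    const key = `${userId}:${seq}`;
    if (seen.has(key)) return null; // duplicate of an earlier send
    seen.add(key);
    const text = new TextDecoder().decode(new Uint8Array(buffer, 4));
    return { seq, text };
  };
}
```

A sender could call `trtc.sendSEIMessage(encodeSei(seq, text))` a few times for the same `seq`, while the TRTC.EVENT.SEI_MESSAGE handler passes `event.userId` and `event.data` to `receive` and ignores `null` results.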

Example

// 1. enable SEI

const trtc = TRTC.create({

enableSEI: true

})

// 2. send SEI

try {

await trtc.enterRoom({

userId: 'user_1',

roomId: 12345,

})

await trtc.startLocalVideo();

const uint8Array = new Uint8Array([1, 2, 3]);

trtc.sendSEIMessage(uint8Array.buffer);

} catch(error) {

console.warn(error);

}

// 3. receive SEI

trtc.on(TRTC.EVENT.SEI_MESSAGE, event => {

console.warn(`sei ${event.data} from ${event.userId}`);

})

Parameters:

| Name | Type | Description |
|---|---|---|
| buffer | ArrayBuffer | Required. SEI data to be sent. |
| options | Object | Optional. |

sendCustomMessage(message)

Send Custom Message to all remote users in the room.

Note:

- Only users with the TRTC.TYPE.ROLE_ANCHOR role can call sendCustomMessage.

- Call this API after enterRoom() has succeeded.

- Custom messages are sent in order and as reliably as possible, but messages may be lost on a very poor network. The receiver also receives the messages in order.

Since: v5.6.0

See: Listen for the TRTC.EVENT.CUSTOM_MESSAGE event to receive custom messages.
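Since `data` is an ArrayBuffer, structured payloads need to be serialized first. The sketch below carries a JSON object in the message; `buildCustomMessage` and `parseCustomMessage` are hypothetical helpers, and JSON is just one possible encoding.

```javascript
// Hypothetical helper: wrap a JSON-serializable payload for sendCustomMessage.
function buildCustomMessage(cmdId, payload) {
  const bytes = new TextEncoder().encode(JSON.stringify(payload));
  // Copy into an exactly sized ArrayBuffer for the `data` field.
  const data = bytes.buffer.slice(bytes.byteOffset, bytes.byteOffset + bytes.byteLength);
  return { cmdId, data };
}

// Hypothetical helper: decode the payload from a CUSTOM_MESSAGE event.
function parseCustomMessage(event) {
  return JSON.parse(new TextDecoder().decode(event.data));
}
```

On the sending side this would be used as `trtc.sendCustomMessage(buildCustomMessage(1, { type: 'like', count: 3 }))`, and on the receiving side as `parseCustomMessage(event)` inside the TRTC.EVENT.CUSTOM_MESSAGE handler.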

Example

// send custom message

trtc.sendCustomMessage({

cmdId: 1,

data: new TextEncoder().encode('hello').buffer

});

// receive custom message

trtc.on(TRTC.EVENT.CUSTOM_MESSAGE, event => {

// event.userId: remote userId.

// event.cmdId: message cmdId.

// event.seq: message sequence number.

// event.data: custom message data, type is ArrayBuffer.

console.log(`received custom msg from ${event.userId}, message: ${new TextDecoder().decode(event.data)}`)

})

Parameters:

| Name | Type | Description |
|---|---|---|
| message | object | Required. |

(async) callExperimentalAPI(name, options)

Call experimental API

| APIName | name | param |
|---|---|---|
| 'enableAudioFrameEvent' | Configure the PCM data of the Audio Frame Event | EnableAudioFrameEventOptions |
| 'pauseRemotePlayer' | Pause the remote player (includes audio and video) | PauseRemotePlayerOptions |
| 'resumeRemotePlayer' | Resume the remote player (includes audio and video) | ResumeRemotePlayerOptions |

Example

// Call back the PCM data of the remote user 'user_A'. The default PCM data is 48 kHz, mono

await trtc.callExperimentalAPI('enableAudioFrameEvent', { enable: true, userId: 'user_A'})

// Call back all remote PCM data and set the PCM data to 16 kHz, stereo

await trtc.callExperimentalAPI('enableAudioFrameEvent', { enable: true, userId: '*', sampleRate: 16000, channelCount: 2 })

// Set the MessagePort for the local microphone pcm data callback

await trtc.callExperimentalAPI('enableAudioFrameEvent', { enable: true, userId: '', port })

// Cancel callback of local microphone pcm data

await trtc.callExperimentalAPI('enableAudioFrameEvent', { enable: false, userId: '' })

Parameters:

| Name | Type | Description |
|---|---|---|
| name | string | Required. |
| options | EnableAudioFrameEventOptions \| ResumeRemotePlayerOptions \| PauseRemotePlayerOptions | Required. |

(static) setLogLevel([level], [enableUploadLog])

Set the log output level

It is recommended to set the DEBUG level during development and testing, which includes detailed prompt information.

The default output level is INFO, which includes the log information of the main functions of the SDK.

Example

// Output logs at DEBUG level and above

TRTC.setLogLevel(1);

Parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| level | 0-5 | | Log output level. 0: TRACE, 1: DEBUG, 2: INFO, 3: WARN, 4: ERROR, 5: NONE. |
| enableUploadLog | boolean | true | Whether to enable log upload (enabled by default). Turning it off is not recommended because it makes troubleshooting harder. |
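To avoid bare numbers at call sites, the level values from the table can be given names. `LOG_LEVEL` and `pickLogLevel` below are hypothetical conveniences, not SDK exports; the only SDK call involved is TRTC.setLogLevel, shown in a comment.

```javascript
// Names for the numeric levels listed in the table above (not an SDK export).
const LOG_LEVEL = Object.freeze({ TRACE: 0, DEBUG: 1, INFO: 2, WARN: 3, ERROR: 4, NONE: 5 });

// DEBUG during development and testing, INFO (the default level) otherwise.
function pickLogLevel(isDevelopment) {
  return isDevelopment ? LOG_LEVEL.DEBUG : LOG_LEVEL.INFO;
}

// Usage with the SDK loaded:
// TRTC.setLogLevel(pickLogLevel(true));
```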

(static) isSupported() → {Promise.<object>}

Check if the TRTC Web SDK is supported by the current browser

- Reference: Browsers Supported.

Example

TRTC.isSupported().then((checkResult) => {

if(!checkResult.result) {

console.log('checkResult', checkResult.result, 'checkDetail', checkResult.detail);

// The SDK does not support the current browser. Guide the user to use the latest version of Chrome.

}

const { isBrowserSupported, isWebRTCSupported, isMediaDevicesSupported, isH264EncodeSupported, isH264DecodeSupported } = checkResult.detail;

// Different business scenarios may require varying levels of detection granularity, such as only needing pushing or pulling stream.

// Detect whether the browser supports capturing the camera and microphone for upstreaming.

const isPushMicrophoneSupported = isBrowserSupported && isWebRTCSupported && isMediaDevicesSupported;

const isPushCameraSupported = isBrowserSupported && isWebRTCSupported && isMediaDevicesSupported && isH264EncodeSupported;

// Detect whether the browser supports pulling stream.

const isPullAudioSupported = isBrowserSupported && isWebRTCSupported;

const isPullVideoSupported = isBrowserSupported && isWebRTCSupported && isH264DecodeSupported;

});

Returns:

A Promise that resolves to the detection result

| Property | Type | Description |
|---|---|---|
| checkResult.result | boolean | If true, the current environment supports the basic ability to capture the camera and microphone for streaming. |
| checkResult.detail.isBrowserSupported | boolean | Whether the current browser is supported by the SDK |
| checkResult.detail.isWebRTCSupported | boolean | Whether the current browser supports WebRTC |
| checkResult.detail.isWebCodecsSupported | boolean | Whether the current browser supports WebCodecs |
| checkResult.detail.isMediaDevicesSupported | boolean | Whether the current browser supports accessing media devices and capturing microphone/camera |
| checkResult.detail.isScreenShareSupported | boolean | Whether the current browser supports screen sharing functionality |
| checkResult.detail.isSmallStreamSupported | boolean | Whether the current browser supports small streams |
| checkResult.detail.isH264EncodeSupported | boolean | Whether the current browser supports H264 encoding |
| checkResult.detail.isH264DecodeSupported | boolean | Whether the current browser supports H264 decoding |
| checkResult.detail.isVp8EncodeSupported | boolean | Whether the current browser supports VP8 encoding |
| checkResult.detail.isVp8DecodeSupported | boolean | Whether the current browser supports VP8 decoding |

- Type

- Promise.<object>
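The flag combinations used in the example above can be expressed as a lookup per business scenario. The following is a minimal sketch, assuming a checkResult object shaped like the table above; the helper name missingCapabilities and the scenario names are hypothetical and are not part of the SDK.

```javascript
// Hypothetical helper (not part of the TRTC SDK): lists which detail flags
// returned by TRTC.isSupported() are not true for a given scenario.
const REQUIRED_FLAGS = {
  // Flag combinations taken from the isSupported() example.
  publishMicrophone: ['isBrowserSupported', 'isWebRTCSupported', 'isMediaDevicesSupported'],
  publishCamera: ['isBrowserSupported', 'isWebRTCSupported', 'isMediaDevicesSupported', 'isH264EncodeSupported'],
  subscribeAudio: ['isBrowserSupported', 'isWebRTCSupported'],
  subscribeVideo: ['isBrowserSupported', 'isWebRTCSupported', 'isH264DecodeSupported'],
};

function missingCapabilities(checkResult, scenario) {
  const required = REQUIRED_FLAGS[scenario];
  if (!required) {
    throw new Error(`Unknown scenario: ${scenario}`);
  }
  const detail = (checkResult && checkResult.detail) || {};
  // A flag counts as missing unless it is strictly true.
  return required.filter((flag) => detail[flag] !== true);
}

// Usage sketch (requires the SDK to be loaded in a browser):
// const checkResult = await TRTC.isSupported();
// const missing = missingCapabilities(checkResult, 'publishCamera');
// if (missing.length > 0) { /* guide the user to a supported browser */ }
```

Returning the names of the missing flags, rather than a single boolean, makes it easier to log or display why a scenario is unavailable.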

(async, static) getPermissions(option) → {Promise.<PermissionResult>}

Get the permission state of the camera and microphone.

- Since: v5.13.0

Example

const { camera, microphone } = await TRTC.getPermissions({

request: true, // if true, will request permission to use the camera and microphone.

types: ['camera', 'microphone']

});

console.log(camera, microphone); // 'granted' | 'denied' | 'prompt' | null. null means the browser does not support querying this permission.

Parameters:

| Name | Type | Description |
|---|---|---|
| option | PermissionOption | Required. Its properties include request and types, as used in the example above. |

Returns:

Promise returns the permission state of the camera and microphone

- Type

- Promise.<PermissionResult>
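Each of the four possible states usually calls for a different reaction in the UI. The following is a minimal sketch of such a mapping; the helper name nextStepForPermission and the returned step names are hypothetical and are not part of the SDK.

```javascript
// Hypothetical helper (not part of the TRTC SDK): maps a permission state
// returned by TRTC.getPermissions() to a suggested next step for the app.
function nextStepForPermission(state) {
  switch (state) {
    case 'granted':
      return 'ready'; // the device can be captured right away
    case 'prompt':
      return 'request'; // the browser will ask the user on the next request
    case 'denied':
      return 'show-settings-hint'; // the user must change browser settings
    case null:
      return 'unknown'; // the browser cannot report this permission
    default:
      throw new Error(`Unexpected permission state: ${state}`);
  }
}

// Usage sketch (requires the SDK to be loaded in a browser):
// const { camera } = await TRTC.getPermissions({ types: ['camera'] });
// if (nextStepForPermission(camera) === 'request') {
//   await TRTC.getPermissions({ request: true, types: ['camera'] });
// }
```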

(static) getCameraList([requestPermission]) → {Promise.<Array.<MediaDeviceInfo>>}

Returns the list of camera devices

Note

- This interface is not available over HTTP. Deploy your website over HTTPS. See Privacy and security.

- You can call the browser's native getCapabilities interface to get the camera's maximum supported resolution and frame rate and, on mobile devices, to distinguish front and rear cameras. getCapabilities is supported in Chrome 67+, Edge 79+, Safari 17+, and Opera 54+.

- On Huawei phones, the rear camera that is captured may be the telephoto lens. In this case, you can use this interface to find the rear camera with the largest supported resolution. Generally, the main camera of a phone has the largest resolution.

Example

const cameraList = await TRTC.getCameraList();

if (cameraList[0] && cameraList[0].getCapabilities) {

const { width, height, frameRate, facingMode } = cameraList[0].getCapabilities();

console.log(width.max, height.max, frameRate.max);

if (facingMode) {

if (facingMode[0] === 'user') {

// front camera

} else if (facingMode[0] === 'environment') {

// rear camera

}

}

}

Parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| requestPermission | boolean | true | |

Returns:

Promise returns an array of MediaDeviceInfo

- Type

- Promise.<Array.<MediaDeviceInfo>>
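The Huawei note above suggests choosing the rear camera with the largest supported resolution. The following is a minimal sketch of that selection, assuming the list entries expose getCapabilities as in the example; the helper name pickMainRearCamera is hypothetical and is not part of the SDK.

```javascript
// Hypothetical helper (not part of the TRTC SDK): among the rear cameras in
// the list, returns the one with the largest maximum resolution, or null.
function pickMainRearCamera(cameraList) {
  let best = null;
  let bestPixels = -1;
  for (const camera of cameraList) {
    // getCapabilities is not available in every browser.
    if (typeof camera.getCapabilities !== 'function') continue;
    const { width, height, facingMode } = camera.getCapabilities();
    const isRear = Array.isArray(facingMode) && facingMode.includes('environment');
    if (!isRear || !width || !height) continue;
    const pixels = width.max * height.max;
    if (pixels > bestPixels) {
      best = camera;
      bestPixels = pixels;
    }
  }
  return best;
}

// Usage sketch (requires the SDK to be loaded in a browser):
// const mainCamera = pickMainRearCamera(await TRTC.getCameraList());
// if (mainCamera) { /* use mainCamera.deviceId when starting local video */ }
```

When no camera reports a rear-facing mode, which is typical on desktops, the function returns null and the caller can fall back to the browser's default camera.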

(static) getMicrophoneList([requestPermission]) → {Promise.<Array.<MediaDeviceInfo>>}

Returns the list of microphone devices

Note

- This interface is not available over HTTP. Deploy your website over HTTPS. See Privacy and security.

- You can call the browser's native getCapabilities interface to get information about the microphone's capabilities, such as the maximum number of channels supported. getCapabilities is supported in Chrome 67+, Edge 79+, Safari 17+, and Opera 54+.

- On Android there are usually multiple microphones, with labels such as ['default', 'Speakerphone', 'Headset earpiece']. If you do not specify a microphone in trtc.startLocalAudio, the browser's default microphone may be the headset earpiece, and sound will play through the earpiece. To play sound through the speaker, specify the microphone labeled 'Speakerphone'.

Example

const microphoneList = await TRTC.getMicrophoneList();

if (microphoneList[0] && microphoneList[0].getCapabilities) {

const { channelCount } = microphoneList[0].getCapabilities();

console.log(channelCount.max);

}

Parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| requestPermission | boolean | true | |

Returns:

Promise returns an array of MediaDeviceInfo

- Type

- Promise.<Array.<MediaDeviceInfo>>
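The Android note above relies on finding the microphone labeled 'Speakerphone'. The following is a minimal sketch of that lookup; the helper name findSpeakerphoneMicrophone is hypothetical and is not part of the SDK, and the exact label may differ between devices.

```javascript
// Hypothetical helper (not part of the TRTC SDK): returns the microphone
// whose label is 'Speakerphone', or null when the list has no such entry.
function findSpeakerphoneMicrophone(microphoneList) {
  const match = microphoneList.find((mic) => mic.label === 'Speakerphone');
  return match || null;
}

// Usage sketch (requires the SDK to be loaded in a browser):
// const mic = findSpeakerphoneMicrophone(await TRTC.getMicrophoneList());
// if (mic) {
//   // Specify mic.deviceId as the microphone when calling
//   // trtc.startLocalAudio (see that method's options).
// }
```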

(static) getSpeakerList([requestPermission]) → {Promise.<Array.<MediaDeviceInfo>>}

Returns the list of speaker devices.

- Fully supported only in PC browsers.

- Support on mobile devices is limited. It is currently known to work only on OpenHarmony NEXT and iOS 26+.

Parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| requestPermission | boolean | true | |

Returns:

Promise returns an array of MediaDeviceInfo

- Type

- Promise.<Array.<MediaDeviceInfo>>
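A common use of the returned list is to fill a device picker. The following is a minimal sketch, assuming each entry exposes the standard MediaDeviceInfo fields deviceId and label; the helper name toSelectOptions is hypothetical and is not part of the SDK. Browsers return an empty label when device permission has not been granted, so the sketch substitutes a numbered placeholder.

```javascript
// Hypothetical helper (not part of the TRTC SDK): converts a device list
// into { value, text } pairs suitable for a dropdown.
function toSelectOptions(devices, fallbackPrefix) {
  return devices.map((device, index) => ({
    value: device.deviceId,
    // label is an empty string when permission has not been granted.
    text: device.label || `${fallbackPrefix} ${index + 1}`,
  }));
}

// Usage sketch (requires the SDK to be loaded in a browser):
// const speakerList = await TRTC.getSpeakerList();
// const options = toSelectOptions(speakerList, 'Speaker');
// // When the user picks one: TRTC.setCurrentSpeaker(option.value);
```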

(async, static) setCurrentSpeaker(speakerId)

Set the current speaker for audio playback

Note

- This interface is supported only on PC, Android, and OpenHarmony NEXT devices.

- Android device requirements:

  - SDK version >= 5.9.0

  - Supports switching between the speaker and the headset by passing TRTC.TYPE.SPEAKER or TRTC.TYPE.HEADSET

  - Call startLocalAudio() first to capture the microphone

  - May not work on some Android devices; use with caution

- OpenHarmony NEXT device requirements:

  - OS version >= 5.0

Example

// For PC and OpenHarmony NEXT devices

TRTC.setCurrentSpeaker('your_expected_speaker_id');

// For Android(sdk version >= 5.9.0)

TRTC.setCurrentSpeaker(TRTC.TYPE.SPEAKER); // Switch to speaker

TRTC.setCurrentSpeaker(TRTC.TYPE.HEADSET); // Or switch to headset

Parameters:

| Name | Type | Description |
|---|---|---|
| speakerId | string \| TRTC.TYPE.SPEAKER \| TRTC.TYPE.HEADSET | Required. Speaker ID. Accepts a speaker device ID, TRTC.TYPE.SPEAKER, or TRTC.TYPE.HEADSET. |
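Because the accepted argument differs by platform, an application may want to centralize that choice. The following is a minimal sketch under stated assumptions: the helper name resolveSpeakerArgument and the platform strings 'pc', 'android', and 'openharmony' are hypothetical, and platform detection is left to the caller. Only TRTC.TYPE.SPEAKER and TRTC.TYPE.HEADSET come from the SDK.

```javascript
// Hypothetical helper (not part of the TRTC SDK): picks the value to pass to
// TRTC.setCurrentSpeaker() following the platform rules in the notes above.
// `TYPE` is expected to be TRTC.TYPE; it is a parameter so the function can
// be exercised without loading the SDK.
function resolveSpeakerArgument({ platform, speakerId, useLoudspeaker }, TYPE) {
  if (platform === 'android') {
    // Android switches between speaker and headset via the TYPE constants.
    return useLoudspeaker ? TYPE.SPEAKER : TYPE.HEADSET;
  }
  if (platform === 'pc' || platform === 'openharmony') {
    if (!speakerId) {
      throw new Error('A speaker device ID is required on this platform');
    }
    return speakerId;
  }
  throw new Error(`setCurrentSpeaker is not supported on: ${platform}`);
}

// Usage sketch (requires the SDK to be loaded in a browser):
// const arg = resolveSpeakerArgument(
//   { platform: 'android', useLoudspeaker: true }, TRTC.TYPE);
// await TRTC.setCurrentSpeaker(arg);
```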